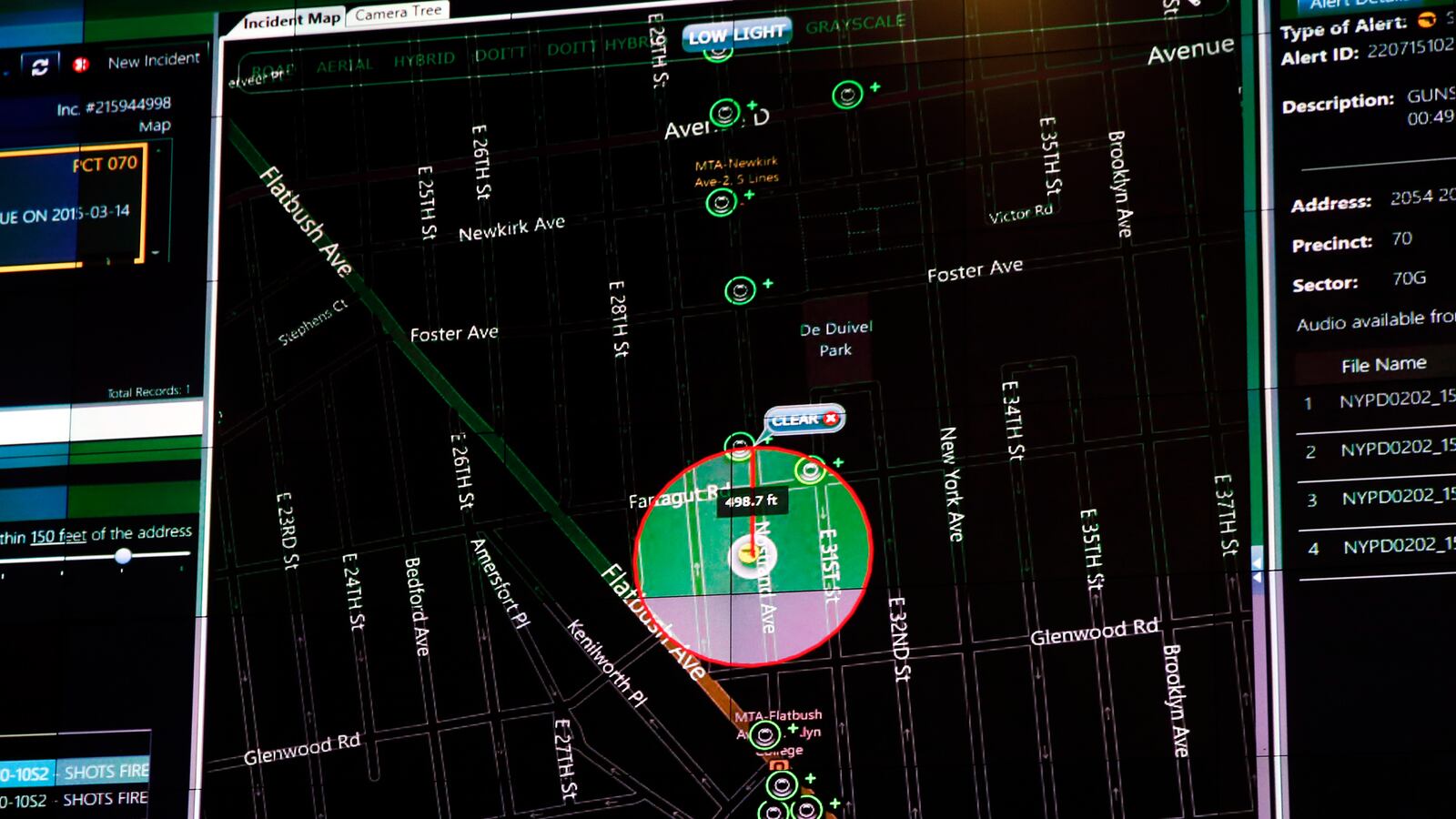

Analysts for ShotSpotter, a system that uses microphones to locate gunshots and alert police departments across America, “frequently modify alerts at the request of police departments—some of which appear to be grasping for evidence that supports their narrative of events,” according to court filings reviewed by Motherboard. Motherboard reported that in one instance involving a murder in Chicago, ShotSpotter's algorithm initially classified a suspected gunshot as a firecracker. But, according to Motherboard, a ShotSpotter analyst overrode the algorithm and “reclassified” the sound as a gunshot. Motherboard said another ShotSpotter analyst also moved the suspected gunshot's coordinates to a point closer to where a suspect's car had been seen in surveillance footage.

In another case, Motherboard said ShotSpotter analysts overruled the algorithm at the request of police in Rochester, New York, after it had initially classified a sound picked up by the microphones as helicopter rotors rather than gunshots. The suspect's conviction was later tossed after a judge questioned the reliability of ShotSpotter's technology.

Editor’s Note: Motherboard updated its original story to reflect that ShotSpotter did not alter the gunfire location in the Chicago murder. Lawyers for ShotSpotter provided court documents showing “that ShotSpotter did not change the location of the gunfire between its real-time alert on the night of the shooting and its later detailed forensic report, whether to fit the police’s narrative or otherwise. On the night of the shooting, ShotSpotter’s Real-Time Alert geolocated the shots at the intersection of East 63rd Street and South Stony Island Avenue. Later, ShotSpotter’s expert analyzed the audio and prepared a Detailed Forensic Report, which geolocated the gunfire at the same intersection.”